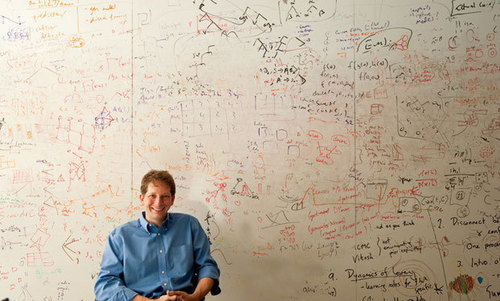

Joshua Tenenbaum’s early research on concept learning might be useful for FAI (Friendly AI) development.

His PhD thesis, *A Bayesian Framework for Concept Learning*, describes an approach to locating concepts that is designed to let AIs pinpoint and generalize concepts from limited sets of examples, closer to how well humans do it.
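To give a flavor of the idea, here is a toy Bayesian concept learner. This is an illustrative sketch, not code from the thesis. It assumes a small, hand-picked hypothesis space over the integers 1 to 100, a uniform prior, and a "size principle" likelihood in which each example is treated as a uniform random draw from the concept, so a hypothesis *h* consistent with *n* examples gets likelihood (1/|h|)^n. Generalization to a new item averages over every hypothesis, weighted by its posterior. The names `HYPOTHESES`, `posterior`, and `generalize` are hypothetical.

```python
"""Toy Bayesian concept learner (illustrative sketch, not code from the thesis)."""

N = 100  # assumed domain: the integers 1..100

# Hypothetical hypothesis space: each concept is just the set of numbers it picks out.
HYPOTHESES = {
    "even numbers": {n for n in range(1, N + 1) if n % 2 == 0},
    "odd numbers": {n for n in range(1, N + 1) if n % 2 == 1},
    "powers of 2": {2 ** k for k in range(1, 7)},   # 2, 4, ..., 64
    "multiples of 10": set(range(10, N + 1, 10)),
    "squares": {k * k for k in range(1, 11)},        # 1, 4, ..., 100
    "any number": set(range(1, N + 1)),
}


def posterior(examples, hypotheses=HYPOTHESES):
    """Return p(h | examples) under a uniform prior and the size principle."""
    prior = 1.0 / len(hypotheses)
    unnormalized = {}
    for name, extension in hypotheses.items():
        if all(x in extension for x in examples):
            # Size principle: examples are assumed to be drawn uniformly from
            # the concept, so smaller consistent concepts score higher.
            unnormalized[name] = prior * (1.0 / len(extension)) ** len(examples)
        else:
            unnormalized[name] = 0.0  # inconsistent with at least one example
    total = sum(unnormalized.values())
    return {name: p / total for name, p in unnormalized.items()}


def generalize(y, examples, hypotheses=HYPOTHESES):
    """p(y belongs to the concept | examples), averaging over hypotheses."""
    post = posterior(examples, hypotheses)
    return sum(p for name, p in post.items() if y in hypotheses[name])


if __name__ == "__main__":
    for examples in ([16], [16, 8, 2, 64]):
        print(examples, {y: round(generalize(y, examples), 3) for y in (32, 10, 3)})
```

With the single example 16, belief is spread across several concepts (powers of 2, squares, even numbers, any number), so 10 still gets some probability. After 16, 8, 2, 64, nearly all posterior mass lands on "powers of 2" and the probability for 10 drops close to zero. That kind of sharpening from a handful of examples is the behavior the thesis is after.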

It’d be nice if future superintelligences didn’t automatically and immediately veer off into unintended consequences as soon as they apply greater-than-human optimization pressure to human concepts. This work will almost certainly not be enough on its own, but it’s better than having an even more brittle grasp of concepts during an AGI ascent.

The thesis is available on web.mit.edu.